Your mobile app referral program is live, but is it performing at its peak? You might think a “$10 credit” is the perfect incentive, but what if a “Free Month of Premium” could double your conversion rate at half the cost? The only way to know for sure is to test. The best way to A/B test a referral program is to move beyond guesswork and systematically experiment with its core components.

This guide provides a practical, data-driven framework for running effective experiments on your mobile referral program. We will treat referral program optimization as a scientific process, giving you a clear plan for what to test, how to implement the test technically, and how to measure the results to find a clear winner.

Why You Must A/B Test Your Referral Program

Running a referral program without A/B testing is like flying blind. You might be getting results, but you have no way of knowing if you’re leaving significant growth on the table. A structured testing process provides clear benefits:

- Move from Assumptions to Data: Replace “I think this will work” with “I know this works because the data proves it.”

- Increase ROI: Discover the most cost-effective incentive. You might find that a smaller, non-cash reward performs just as well as an expensive cash bonus, dramatically improving your program’s profitability.

- Improve User Experience: By testing different offers and messaging, you learn what truly motivates your users, allowing you to create a more compelling and engaging program.

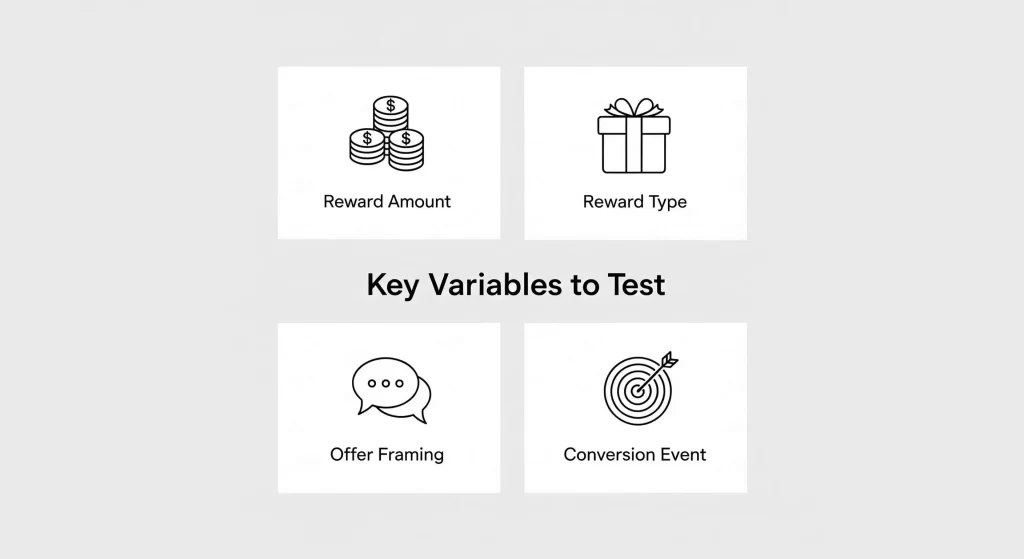

What to Test: A Framework of Referral Incentive Ideas

A successful referral program optimization strategy involves testing one variable at a time to get clean results. Here are the four most impactful areas to test, with plenty of referral incentive ideas to get you started. For more inspiration, check out these 15+ Mobile App Referral Program Examples.

1. The Reward Amount

This is the most obvious test, and often the most impactful one. A small change in value can lead to a big change in performance.

- Test A: $5 Credit

- Test B: $10 Credit

2. The Reward Type

This test helps you understand what your users truly value. Is it cash, or is it a better experience with your product?

- Test A: 10% Discount on Next Purchase

- Test B: Free Month of Premium Subscription

3. The Offer Framing

The psychology of your offer matters. How you present the same value can dramatically alter its perceived appeal.

- Test A: “Give $10, Get $10” (Focuses on the transaction)

- Test B: “Give Your Friends a $10 Gift!” (Focuses on generosity)

4. The Conversion Event

This test helps you balance friction and user quality. When is the reward actually triggered?

- Test A: Reward granted on new user sign-up.

- Test B: Reward granted on new user’s first purchase.

The Technical Framework: How to A/B Test a Referral Program with Tapp

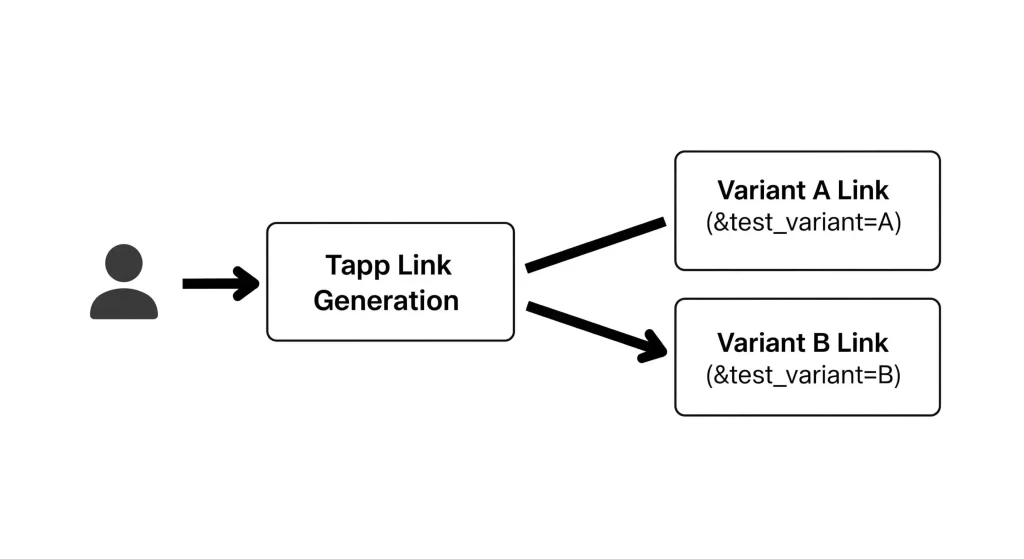

To accurately A/B test a referral program in a mobile app, you need a tool that can reliably segment users and track the performance of each variant through the entire mobile funnel, including the App Store. This is impossible without a robust attribution system.

Tapp provides the perfect infrastructure for this. The technical flow is simple:

- Segment Your Users: In your app, divide your users into two groups, A and B. Each user should stay in the same group for the whole test.

- Generate Variant Links: When a user from “Group A” shares their referral link, make an API call to Tapp and include a custom parameter identifying the variant. When a user from “Group B” shares, generate a link with a different parameter.

      // For a user in Group A
      POST api.tapp.so/v1/links
      { "params": { "referrer_id": "user123", "test_variant": "A" } }

      // For a user in Group B
      POST api.tapp.so/v1/links
      { "params": { "referrer_id": "user456", "test_variant": "B" } }

- Attribute the New User: When a new user installs and opens the app, the Tapp SDK will retrieve the `test_variant` parameter along with the `referrer_id`. You can now associate this new user with the correct test group in your backend.

- Track Conversions: When the new user completes the target action (e.g., makes a purchase), you fire a Tapp tracking event. This event will be automatically associated with the correct test variant.
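
The first two steps can be sketched in a few lines of backend code. This is a minimal illustration, not Tapp's implementation: the function names `assign_variant` and `build_link_params` and the experiment label are hypothetical, and the only part taken from the flow above is the shape of the `params` payload. Hashing the user ID is one common way to make sure the same user always lands in the same group.

```python
import hashlib

VARIANTS = ("A", "B")


def assign_variant(user_id: str, experiment: str = "referral_reward_v1") -> str:
    """Deterministically bucket a user so they always get the same variant.

    The experiment label (a made-up name here) is mixed into the hash so that
    a later test reshuffles users instead of reusing the same split.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    # Interpret the first 8 bytes as an integer and map it onto the variants.
    bucket = int.from_bytes(digest[:8], "big") % len(VARIANTS)
    return VARIANTS[bucket]


def build_link_params(referrer_id: str) -> dict:
    """Build the request body for the link-creation call described above."""
    return {
        "params": {
            "referrer_id": referrer_id,
            "test_variant": assign_variant(referrer_id),
        }
    }


if __name__ == "__main__":
    print(build_link_params("user123"))
```

Because the assignment is a pure function of the user ID, you do not need to store the group anywhere to keep it stable, although logging it alongside the user record makes later analysis easier.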

Measuring Success: The Key Metrics

Once your test has run long enough to achieve statistical significance, you can analyze the results. By filtering your analytics by the `test_variant` parameter, you can directly compare the performance of each offer across the key metrics.

Invite Rate

Question: Did one offer encourage more users to share?

Calculation: `(Users who sent at least one invite) / (Total users in the test group)`

If Variant B has a significantly higher invite rate, its offer framing or value is more compelling to your existing users.

Conversion Rate

Question: Did one offer convert new users more effectively?

Calculation: `(New users who completed the target action) / (Total new users who installed from that variant’s links)`

If Variant A has a higher conversion rate, its offer to the *new* user is more effective at driving them to activate.
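
To judge whether a gap in conversion rate is more than noise, one standard option is a two-proportion z-test. The sketch below uses only the Python standard library; the function name and the counts in the usage example are made up for illustration, and your analytics tool may use a different test.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in conversion rates.

    conv_* is the number of new users who completed the target action,
    n_* is the number of new users who installed from that variant's links.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("conversion rates of exactly 0% or 100% cannot be tested")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical counts: 120 of 1,000 installs converted for A, 180 of 1,000 for B.
    z, p = two_proportion_z_test(120, 1000, 180, 1000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value (conventionally below 0.05) suggests the difference is unlikely to be random chance. The same function works for invite rate if you pass inviters and group sizes instead.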

Cost Per Acquisition (CPA)

Question: Which offer was more profitable?

Calculation: `(Total cost of rewards paid out for a variant) / (Number of new activated users from that variant)`

This is your ultimate success metric. A variant might have a lower conversion rate but be far more profitable if its reward cost is significantly lower. This is the core of successful referral program optimization.
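
The three calculations above can be put side by side in a small script. The `VariantStats` class and all of the numbers below are invented for illustration; in practice the counts would come from your analytics filtered by `test_variant`.

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    users_in_group: int      # existing users assigned to the variant
    users_who_invited: int   # of those, users who sent at least one invite
    new_installs: int        # new users who installed from the variant's links
    activated_users: int     # new users who completed the target action
    reward_cost: float       # total cost of rewards paid out for the variant

    @property
    def invite_rate(self) -> float:
        return self.users_who_invited / self.users_in_group

    @property
    def conversion_rate(self) -> float:
        return self.activated_users / self.new_installs

    @property
    def cpa(self) -> float:
        return self.reward_cost / self.activated_users


# Made-up example data: variant A pays more per activation than variant B.
variant_a = VariantStats(5000, 600, 900, 270, 2700.0)
variant_b = VariantStats(5000, 550, 800, 200, 1000.0)

for name, stats in (("A", variant_a), ("B", variant_b)):
    print(
        f"{name}: invite rate {stats.invite_rate:.1%}, "
        f"conversion rate {stats.conversion_rate:.1%}, "
        f"CPA ${stats.cpa:.2f}"
    )
```

In this invented data set, variant B converts at 25% against 30% for variant A, yet its CPA is half as high, which is exactly the trade-off described above.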

Frequently Asked Questions (FAQ)

How long should I run an A/B test on my referral program?

You should run the test until you have enough data to be confident the results are not due to random chance. In practice, that means deciding on the sample size before you start and running until you reach it, rather than stopping the first time one variant pulls ahead. For most apps, this will take at least two to four weeks. Use an online A/B test calculator to determine the sample size you need based on your baseline conversion rate and the smallest improvement you want to detect.
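
If you would rather compute the number yourself, the sketch below applies the standard sample-size formula for comparing two proportions. The function name is hypothetical, and the defaults (5% significance level, 80% power) are common conventions rather than anything specific to referral programs.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(
    baseline: float, expected: float, alpha: float = 0.05, power: float = 0.8
) -> int:
    """New users needed per variant to detect a change from `baseline` to `expected`.

    Uses the usual two-sided, two-proportion formula with equal group sizes.
    """
    if baseline == expected:
        raise ValueError("baseline and expected rates must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (baseline + expected) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(baseline * (1 - baseline) + expected * (1 - expected))
    ) ** 2
    return ceil(numerator / (expected - baseline) ** 2)


if __name__ == "__main__":
    # Example: 10% baseline conversion, hoping to detect an improvement to 12%.
    print(sample_size_per_variant(0.10, 0.12))
```

With a 10% baseline and a hoped-for 12%, this lands in the region of a few thousand new users per variant. Halving the lift you want to detect roughly quadruples the requirement, which is why small apps often need the full two to four weeks or longer.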

What is the most important thing to A/B test first?

Start with your biggest assumptions. If you are unsure about the core value of your reward, test the **Reward Type** first (e.g., Cash vs. Premium Feature). If you are confident in the reward type but not the amount, test the **Reward Amount**. Focus on the tests that have the potential for the biggest impact.

Can I test more than two variants at once?

Yes. Testing several variants of a single variable (A, B, C, D) at the same time is usually called A/B/n testing; multivariate testing, strictly speaking, means testing combinations of several variables at once. Either way, be aware that this requires significantly more traffic to reach statistical significance for each variant, so it’s generally recommended for apps with a large user base.

How does this differ from testing other marketing campaigns?

The core principles are the same, but the technical complexity is higher when you A/B test a mobile referral program. You must have a tool like Tapp that can reliably pass tracking data through the App Store to connect the initial share from an existing user to the final conversion from a new user.

Conclusion

Continuous referral program optimization is the key to unlocking its full growth potential. A structured approach to A/B testing allows you to move from guesswork to data-driven decisions, systematically improving your program’s performance and profitability over time.

However, none of this is possible without a reliable data foundation. To confidently A/B test a referral program, you need a powerful attribution tool like Tapp that provides the granular, user-level data required to segment users and measure results with accuracy. Data-driven testing is a cornerstone of effective app growth.

Stop guessing what works. Sign up for your free Tapp account and start A/B testing your referral incentives with data you can trust.

To review the fundamentals of building your referral program, read our Complete Playbook for Building a High-Growth Mobile App Referral Program.